Feature engineering is the manipulation (addition, deletion, combination, or mutation) of the features in your dataset to improve machine learning model training, leading to better model performance and greater accuracy. Effective feature engineering is based on sound knowledge of the business problem and the available data sources.

Creating new features gives you a deeper understanding of your data and results in more valuable insights. When done correctly, feature engineering is one of the most valuable techniques in data science, but it is also one of the most challenging. A familiar example of an engineered feature is the body mass index (BMI) that your doctor uses. BMI is calculated from two measured features, body weight and height (weight in kilograms divided by the square of height in meters), and serves as a surrogate for a characteristic that is very hard to measure accurately: the proportion of lean body mass.
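
As a rough illustration of the BMI example, the sketch below derives a `bmi` column from weight and height. The record layout and the field names `weight_kg` and `height_m` are assumptions made for this example, not part of any particular dataset.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in meters squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


# Hypothetical patient records with two raw features each.
patients = [
    {"weight_kg": 70.0, "height_m": 1.75},
    {"weight_kg": 81.0, "height_m": 1.80},
]

# Feature engineering step: combine two raw features into one derived feature.
for patient in patients:
    patient["bmi"] = bmi(patient["weight_kg"], patient["height_m"])
```

The derived value can then be used alongside, or instead of, the two raw measurements when training a model.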

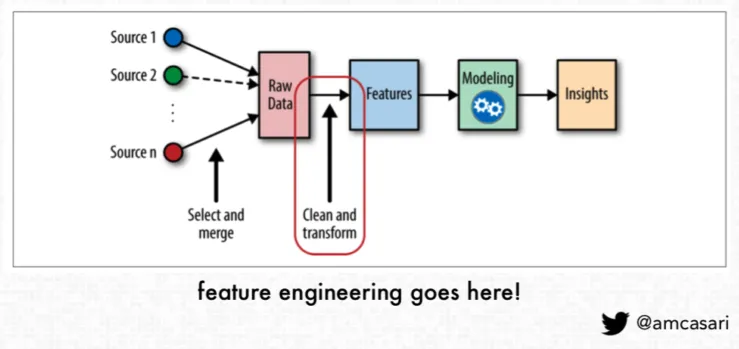

Feature Engineering in ML Lifecycle

Some common types of feature engineering include:

- Scaling and normalization adjust the range and center of the data to ease learning and make the results easier to interpret.

- Filling missing values means replacing null values using expert knowledge, heuristics, or machine learning techniques. Real-world datasets often have missing values because complete data is difficult to collect and because the collection process itself introduces errors.

- Feature selection means removing features because they are unimportant, redundant, or outright counterproductive to learning. Sometimes you simply have too many features and need fewer.

- Feature coding involves choosing a set of symbolic values to represent different categories. Concepts can be captured with a single column that comprises multiple values, or they can be captured with multiple columns, each of which represents a single value and has a true or false in each field. For example, feature coding can indicate whether a particular row of data was collected on a holiday. This is a form of feature construction.

- Feature construction creates one or more new features from one or more existing features. For example, from a date you can derive a feature that indicates the day of the week. With this added insight, the algorithm could discover that certain outcomes are more likely on a Monday or a weekend.

- Feature extraction means moving from low-level features that are unsuitable for learning (in practice, they give poor test results) to higher-level features that are useful for learning. Feature extraction is often valuable when you have specific data formats, such as images or text, that have to be converted to a tabular format in which each row is an example and each column is a feature.
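
Several of the techniques above can be sketched in a few lines of plain Python. The toy records, the field names (`date`, `temp_c`, `store`), and the specific choices of median imputation and z-score standardization are assumptions made for illustration; other fill strategies and scaling methods are equally valid.

```python
from datetime import date
from statistics import mean, median, pstdev

# Hypothetical toy records; None marks a missing value.
rows = [
    {"date": date(2024, 1, 1), "temp_c": 4.0, "store": "north"},
    {"date": date(2024, 1, 6), "temp_c": None, "store": "south"},
    {"date": date(2024, 1, 9), "temp_c": 10.0, "store": "north"},
]


def fill_missing(values):
    """Filling missing values: replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def standardize(values):
    """Scaling: shift to zero mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]


def one_hot(values):
    """Feature coding: one true/false column per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": v == c for c in categories} for v in values]


temps = standardize(fill_missing([r["temp_c"] for r in rows]))
stores = one_hot([r["store"] for r in rows])

features = []
for row, temp, store in zip(rows, temps, stores):
    features.append({
        "temp_scaled": temp,
        # Feature construction: derive weekday information from the date.
        "day_of_week": row["date"].weekday(),  # Monday is 0, Sunday is 6
        "is_weekend": row["date"].weekday() >= 5,
        **store,
    })
```

Each resulting dictionary is one example in tabular form: the raw date has been replaced by constructed weekday features, the category by true/false columns, and the temperature by an imputed, scaled value.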